Boot time measurement: before and after optimization

Target board: BeagleBoard BeaglePlay (TI AM62x)

Goal: measure and reduce end-to-end Linux boot time (kernel + userspace) with a repeatable methodology.

Summary

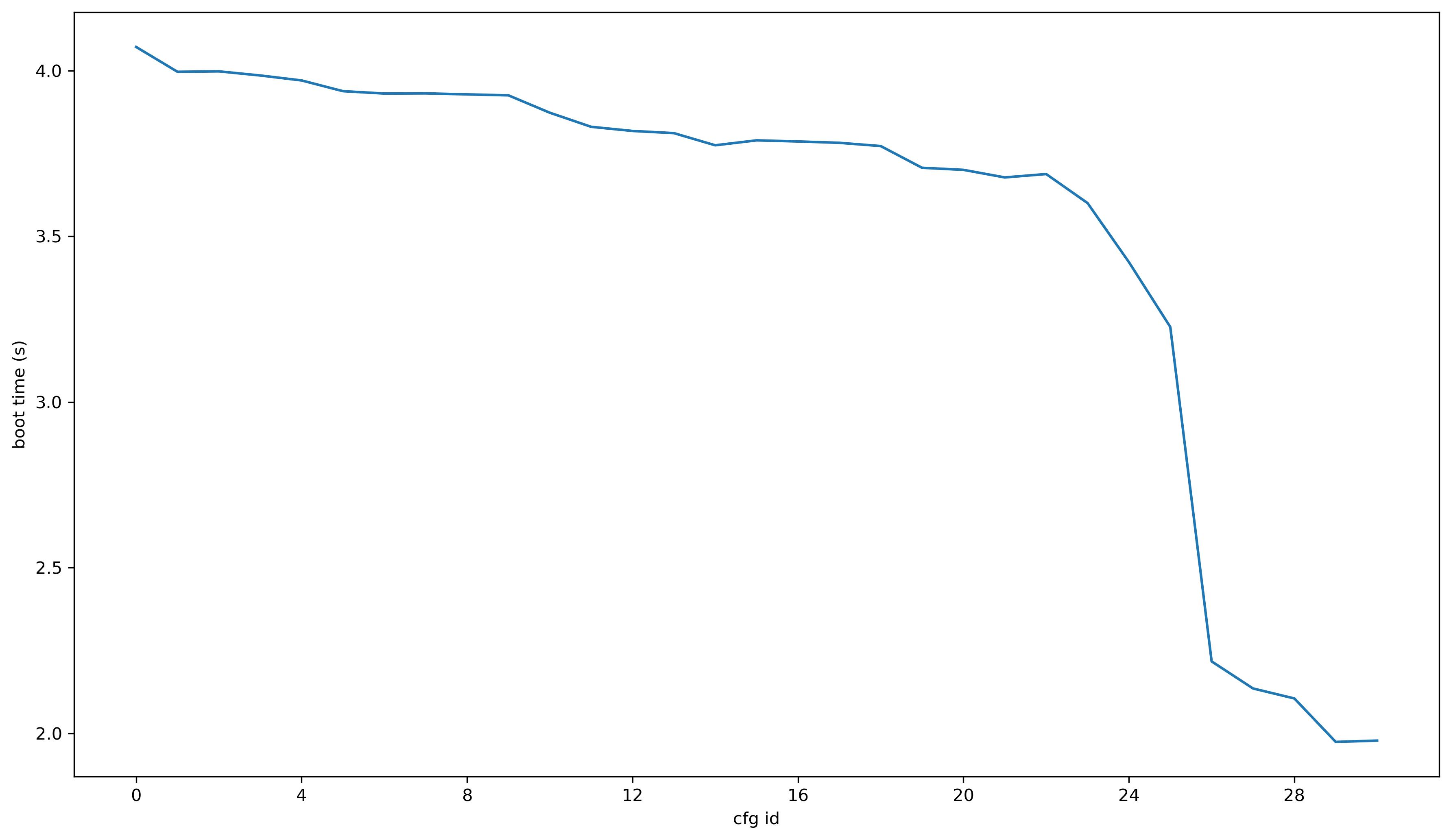

- We evaluated 31 kernel configuration iterations (snapshots 00.0→30.0) and ran 41 cold-boot measurements per snapshot (N = 41), recording `kernel_time_s`, `userspace_time_s`, and `total_time_s`.

- Average total boot time improved from 19.273 s (cfg 00.0) to 15.943 s at best (cfg 29.0), an improvement of −3.33 s (−17.3%).

- Kernel phase dropped from ~4.07 s → ~1.97 s, while userspace fell from ~15.20 s → ~13.97 s; by the end, userspace dominated (~87.6% of the total at cfg 29.0).

- Kernel Image size decreased from ~34.15 MiB (cfg 00.0) to ~12.02 MiB (cfg 29.0) and ~11.96 MiB at cfg 30.0, which correlates with—though doesn’t by itself guarantee—faster kernel bring-up.

- 95% confidence intervals (mean total time) were tight: ±0.083 s at cfg 00.0, ±0.024 s at cfg 29.0, ±0.035 s at cfg 30.0.

Note that both the kernel and the rootfs can be compressed to reduce their size, but this may affect boot time depending on the chosen algorithm (smaller reads from storage versus decompression cost).

Definitions of what we measure

- `kernel_time_s`: time spent in the kernel, from the boot hand-off to the start of userspace.

- `userspace_time_s`: time from userspace start to the target "system ready" state (as defined by the test script).

- `total_time_s`: `kernel_time_s` + `userspace_time_s`.

These fields come from the measurement JSON produced by the test harness, aggregated per kernel configuration (XX.0) over 41 runs.
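The definition of `total_time_s` can be checked boot by boot. This is a minimal sketch. It assumes each per-boot record is a dict with the three keys shown in the Methodology example. Because the stored values are rounded, the check allows a small tolerance; the 2 ms default is an assumption, not a harness setting, and the helper name is illustrative.

```python
def total_is_consistent(run: dict, tol: float = 0.002) -> bool:
    """Return True if total_time_s equals kernel_time_s + userspace_time_s within tol seconds.

    The tolerance (assumed 2 ms) absorbs rounding in the stored values.
    """
    expected = run["kernel_time_s"] + run["userspace_time_s"]
    return abs(run["total_time_s"] - expected) <= tol


# Boot "00" of cfg 00.0 from the abridged JSON example:
# 4.08 + 15.112 = 19.192, while the stored total is 19.193 (1 ms of rounding).
run_00 = {"kernel_time_s": 4.08, "userspace_time_s": 15.112, "total_time_s": 19.193}
print(total_is_consistent(run_00))  # True
```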

Hardware & context

- Board: BeaglePlay (TI AM62x).

- Storage / OS: standard BeaglePlay Linux userspace unless otherwise noted.

- Kernel: configuration snapshots 00.0…30.0, with progressive slimming of the Image.

Methodology

- Repeatability: for each config XX.0, perform 41 cold boots (indices 00…40) to average out variance from storage, thermal, and scheduling jitter.

- Collection: the test harness stores a JSON object with per-boot times plus the kernel Image size. Example (abridged):

  ```json
  {
    "00.0": {
      "00": {"kernel_time_s": 4.08, "userspace_time_s": 15.112, "total_time_s": 19.193},
      ...
      "40": {"kernel_time_s": 4.06, "userspace_time_s": 15.804, "total_time_s": 19.864},
      "rpm": {"kernel_Image_file_size_bytes": 35813888, "kernel_Image_file_size_Mo": 34.15478515625}
    }
  }
  ```

- Statistics: for each image version (XX.0), the mean and standard deviation are computed over the 41 runs, along with a 95% confidence interval of the mean.
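The statistics step can be written out explicitly. Here $t_i$ is the total time of boot $i$ for one configuration and $N = 41$. The sample standard deviation ($N - 1$ denominator) is assumed. The reported CIs match the normal approximation with $z = 1.96$; for cfg 29.0, for example, $1.96 \times 0.080 / \sqrt{41} \approx 0.024$ s. Using the Student quantile $t_{0.975,\,40} \approx 2.021$ instead would widen the intervals by about 3%.

$$
\bar{t} = \frac{1}{N}\sum_{i=1}^{N} t_i,
\qquad
s = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(t_i - \bar{t}\right)^{2}},
\qquad
\mathrm{CI}_{95\%} = \bar{t} \pm 1.96\,\frac{s}{\sqrt{N}}
$$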

Results

Headline numbers

- First snapshot (00.0): kernel 4.072 s, userspace 15.201 s, total 19.273 s (95% CI ±0.083 s). Kernel Image 34.15 MiB.

- Best snapshot (29.0): kernel 1.974 s, userspace 13.968 s, total 15.943 s (95% CI ±0.024 s). Kernel Image 12.02 MiB.

- Latest snapshot (30.0): kernel 1.978 s, userspace 13.995 s, total 15.974 s (95% CI ±0.035 s). Kernel Image 11.96 MiB.

Shift of bottleneck. Early on, kernel ≈ 21.1% of total; by cfg 29.0, kernel ≈ 12.4%, so userspace dominates and should be the next focus.
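The phase shares and the overall gain follow directly from the headline means. Below is a short worked check. The values are copied from the headline numbers, and the helper name is illustrative.

```python
# Mean phase times (s) for two snapshots, copied from the headline numbers.
snapshots = {
    "00.0": {"kernel": 4.072, "userspace": 15.201, "total": 19.273},
    "29.0": {"kernel": 1.974, "userspace": 13.968, "total": 15.943},
}


def share(s: dict, phase: str) -> float:
    """Fraction of the mean total boot time spent in one phase."""
    return s[phase] / s["total"]


for cfg, s in snapshots.items():
    print(f"{cfg}: kernel {share(s, 'kernel'):.1%}, userspace {share(s, 'userspace'):.1%}")

# Change in mean total time between the first and the best snapshot.
baseline = snapshots["00.0"]["total"]
delta = snapshots["29.0"]["total"] - baseline
print(f"total change: {delta:+.2f} s ({delta / baseline:+.1%})")
```

It reproduces the 21.1% and 12.4% kernel shares and the −3.33 s (−17.3%) total improvement.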

Fastest configurations (top‑5 by mean total time)

| cfg | kernel mean (s) | userspace mean (s) | total mean (s) | total SD (s) | kernel Image (MiB) |
|---|---|---|---|---|---|
| 29.0 | 1.974 | 13.968 | 15.943 | 0.080 | 12.02 |
| 30.0 | 1.978 | 13.995 | 15.974 | 0.115 | 11.96 |
| 27.0 | 2.136 | 14.290 | 16.426 | 0.238 | 16.34 |
| 28.0 | 2.105 | 14.385 | 16.491 | 0.370 | 15.63 |
| 26.0 | 2.217 | 14.282 | 16.500 | 0.256 | 16.98 |
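This minimal sketch ranks configurations by mean total time and converts each total SD into a 95% CI half-width. It assumes the normal approximation z = 1.96 with N = 41, which reproduces the ±0.024 s and ±0.035 s quoted for cfg 29.0 and 30.0. The rows are copied from the table above, and the helper names are illustrative.

```python
import math

# (cfg, mean total time in s, total SD in s), copied from the table above.
rows = [
    ("26.0", 16.500, 0.256),
    ("27.0", 16.426, 0.238),
    ("28.0", 16.491, 0.370),
    ("29.0", 15.943, 0.080),
    ("30.0", 15.974, 0.115),
]
N = 41      # cold boots per snapshot
Z95 = 1.96  # normal-approximation quantile (assumed; matches the reported CIs)


def ci_half_width(sd: float, n: int = N, z: float = Z95) -> float:
    """Half-width of the 95% CI of the mean: z * sd / sqrt(n)."""
    return z * sd / math.sqrt(n)


def top_k(rows, k=5):
    """Sort configurations by mean total time, fastest first, and keep k."""
    return sorted(rows, key=lambda r: r[1])[:k]


for cfg, mean, sd in top_k(rows):
    print(f"{cfg}: {mean:.3f} s ± {ci_half_width(sd):.3f} s")
```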

boot_time means by kernel configuration

The following table lists the mean kernel boot phase (`boot_time`, the same quantity as `kernel_time_s`, in seconds) per configuration, rounded to 2 decimals.

| cfg | boot_time mean (s) |
|---|---|
| 00.0 | 4.07 |
| 01.0 | 4.00 |
| 02.0 | 4.00 |
| 03.0 | 3.99 |
| 04.0 | 3.97 |
| 05.0 | 3.94 |
| 06.0 | 3.93 |
| 07.0 | 3.93 |
| 08.0 | 3.93 |
| 09.0 | 3.93 |
| 10.0 | 3.87 |
| 11.0 | 3.83 |
| 12.0 | 3.82 |
| 13.0 | 3.81 |
| 14.0 | 3.77 |
| 15.0 | 3.79 |
| 16.0 | 3.79 |
| 17.0 | 3.78 |
| 18.0 | 3.77 |
| 19.0 | 3.71 |
| 20.0 | 3.70 |
| 21.0 | 3.68 |
| 22.0 | 3.69 |
| 23.0 | 3.60 |
| 24.0 | 3.42 |
| 25.0 | 3.23 |
| 26.0 | 2.22 |
| 27.0 | 2.14 |
| 28.0 | 2.11 |
| 29.0 | 1.97 |
| 30.0 | 1.98 |
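The table shows which snapshot transitions mattered most. This minimal sketch computes consecutive differences of the rounded means above. It shows that the 25.0 → 26.0 step alone accounts for about 1.01 s of the ~2.1 s kernel-phase reduction. The document does not record what changed in that snapshot, and the helper name is illustrative.

```python
# Mean kernel phase per configuration (s), copied in order (00.0 ... 30.0) from the table above.
kernel_means = [
    4.07, 4.00, 4.00, 3.99, 3.97, 3.94, 3.93, 3.93, 3.93, 3.93,
    3.87, 3.83, 3.82, 3.81, 3.77, 3.79, 3.79, 3.78, 3.77, 3.71,
    3.70, 3.68, 3.69, 3.60, 3.42, 3.23, 2.22, 2.14, 2.11, 1.97,
    1.98,
]


def step_deltas(means):
    """Return (from_cfg, to_cfg, delta_s) for each consecutive pair of snapshots."""
    return [
        (f"{i:02d}.0", f"{i + 1:02d}.0", means[i + 1] - means[i])
        for i in range(len(means) - 1)
    ]


# The most negative delta is the largest single-step reduction of the kernel phase.
biggest = min(step_deltas(kernel_means), key=lambda d: d[2])
print(f"{biggest[0]} -> {biggest[1]}: {biggest[2]:+.2f} s")
```

Small positive deltas (for example 14.0 → 15.0) are a few hundredths of a second and are best read as noise at this rounding.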

Conclusion about our boot-time measurements

- Kernel slimming works — removing drivers/features and selecting faster compression cut the Image from 34.15 → 12.02 MiB and the kernel phase from ~4.07 → ~1.97 s, a major win on AM62x.

- Userspace now dominates — at ~13.97 s, userspace is ~7× longer than the kernel at the best snapshot. Prioritize device discovery, filesystem mounts, network init, and service startup.

- Variance is low — with 41 runs per snapshot, 95% CIs are tight (e.g., ±0.024 s at cfg 29.0), so changes ≥ 300 ms are meaningful.
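Welch's t-test is one way to judge whether two snapshots really differ using only their summary statistics. It is not necessarily the method used for this dataset. The sketch below compares cfg 29.0 and cfg 30.0 using the mean totals and total SDs from the top-5 table, with N = 41 each. The helper names are illustrative.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic for the difference of two means (unequal variances)."""
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return (mean_b - mean_a) / se


def welch_df(sd_a, n_a, sd_b, n_b):
    """Welch-Satterthwaite approximation of the degrees of freedom."""
    va, vb = sd_a ** 2 / n_a, sd_b ** 2 / n_b
    return (va + vb) ** 2 / (va ** 2 / (n_a - 1) + vb ** 2 / (n_b - 1))


# cfg 29.0 vs cfg 30.0: mean total and total SD from the top-5 table, N = 41 each.
t = welch_t(15.943, 0.080, 41, 15.974, 0.115, 41)
df = welch_df(0.080, 41, 0.115, 41)
print(f"t = {t:.2f}, df = {df:.1f}")
```

This gives t ≈ 1.4 on roughly 71 degrees of freedom, below the ≈1.99 two-sided 5% critical value. The 31 ms gap between the best and latest snapshots is therefore within run-to-run noise, whereas the ~3.3 s gain over cfg 00.0 is far outside it.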

Comparison to other ARM boards

- BeagleBone Black (AM335x): community reports show tuned boots around ~13.1 s (4.09 s kernel + 9.02 s userspace) and a range into the 20–50 s territory depending on image and services.

- Atmel SAMA5D3 Xplained (ARM, Linux 3.10): Bootlin’s measurements show init start ~7.9 s, with filesystem choices affecting early userspace timing. These are older numbers but useful to highlight what’s possible with lean stacks.

- TI AM62x family: TI’s SDK documentation outlines specific AM62x boot-time tactics (e.g., initrd removal, service trimming, storage tuning, Falcon-mode-like strategies in U-Boot), which map well to BeaglePlay.

Note: cross-board figures are not apples to apples—storage, clocks, device trees, rootfs, and target criteria all differ—so use them as directional references.

Practical guide to reduce userspace time on BeagleBoard BeaglePlay

Low‑hanging fruit

- Remove the initramfs if your kernel has required drivers built‑in; this often shaves seconds on Sitara/AM62x.

- Turn off unused systemd services (demos, extra networking, logging bloat).

- Trim udev and device discovery: blacklist unused modules; defer cold‑plug where safe.

Kernel / bootloader

- Build‑in essential drivers (MMC, rootfs, console), use a lean DTB, and choose a faster compression (e.g., LZ4 often boots faster than gzip on ARM).

- Consider an SPL → Linux (Falcon-mode) boot path where appropriate, so that the SPL loads the kernel directly and skips U-Boot proper.

Filesystem & storage

- Use a read‑only rootfs for production, tune mount options, and pick a filesystem aligned with your workload to reduce early userspace cost.

Service orchestration

- Profile with `systemd-analyze blame` and `systemd-analyze critical-chain`; start only what's needed for your "ready" criterion; mark non-critical services as `Type=idle` or order them after your target.
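The profiling step can be scripted. This minimal sketch assumes the usual human-readable `systemd-analyze blame` layout: one or more duration tokens such as `1min 2.345s` or `850ms`, followed by the unit name. That text format is not a stable machine-readable interface, so treat the parser as illustrative. The helper name and sample input are hypothetical, not measured on the BeaglePlay.

```python
import re

# Duration suffixes assumed to appear in `systemd-analyze blame` output, in seconds.
_UNIT_SECONDS = {"h": 3600.0, "min": 60.0, "s": 1.0, "ms": 1e-3, "us": 1e-6, "µs": 1e-6}
_PART = re.compile(r"^(\d+(?:\.\d+)?)(h|min|ms|us|µs|s)$")


def parse_blame(text: str) -> list[tuple[str, float]]:
    """Parse blame-style text into (unit, seconds) pairs, slowest first.

    Lines whose leading tokens are not all durations are skipped.
    """
    entries = []
    for line in text.splitlines():
        tokens = line.split()
        if len(tokens) < 2:
            continue
        seconds = 0.0
        for tok in tokens[:-1]:
            m = _PART.match(tok)
            if m is None:
                break  # not a duration token: ignore this line
            seconds += float(m.group(1)) * _UNIT_SECONDS[m.group(2)]
        else:
            entries.append((tokens[-1], seconds))
    return sorted(entries, key=lambda e: e[1], reverse=True)


# Hypothetical sample output, for illustration only.
sample = """\
1min 2.500s example-slow.service
      850ms example-net.service
       12ms example-fast.service
"""
for unit, secs in parse_blame(sample)[:10]:
    print(f"{secs:8.3f} s  {unit}")
```

On the board, the input can be captured with `subprocess.run(["systemd-analyze", "blame"], capture_output=True, text=True).stdout`.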

How to reproduce these measurements

- Run many boots per config. This dataset uses 41 boots per snapshot, which is a good minimum for tight confidence intervals.

- Aggregate to means/CI. Report mean/SD/min/max and 95% CI per snapshot; treat deltas below ~100–200 ms with caution.

- Graphing. Prefer integer ticks on the X-axis (config indices) and export the figures at high resolution.
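The aggregation step can be done with the Python standard library alone. This minimal sketch assumes the JSON layout shown in the Methodology section: boot indices as keys, plus a non-numeric `"rpm"` entry holding Image size metadata. It uses the sample standard deviation and the 1.96 normal quantile, which reproduce the reported CIs. The file path passed to `load_results` is up to you, and the helper names are hypothetical.

```python
import json
import math
import statistics

Z95 = 1.96  # normal-approximation quantile, consistent with the CIs reported above
PHASES = ("kernel_time_s", "userspace_time_s", "total_time_s")


def load_results(path: str) -> dict:
    """Load the harness JSON from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize(results: dict) -> dict:
    """Per-config mean/SD/min/max and 95% CI half-width for each phase.

    Only entries keyed by digits (boot indices "00".."40") are treated as runs;
    metadata such as "rpm" is skipped.
    """
    summary = {}
    for cfg, boots in results.items():
        runs = [v for k, v in boots.items() if k.isdigit()]
        cfg_stats = {"n": len(runs)}
        for phase in PHASES:
            xs = [r[phase] for r in runs]
            sd = statistics.stdev(xs) if len(xs) > 1 else 0.0  # sample SD (N - 1)
            cfg_stats[phase] = {
                "mean": statistics.mean(xs),
                "sd": sd,
                "min": min(xs),
                "max": max(xs),
                "ci95": Z95 * sd / math.sqrt(len(xs)),
            }
        summary[cfg] = cfg_stats
    return summary


# Two boots taken from the abridged example; a real file holds 41 per config.
example = {
    "00.0": {
        "00": {"kernel_time_s": 4.08, "userspace_time_s": 15.112, "total_time_s": 19.193},
        "40": {"kernel_time_s": 4.06, "userspace_time_s": 15.804, "total_time_s": 19.864},
        "rpm": {"kernel_Image_file_size_bytes": 35813888, "kernel_Image_file_size_Mo": 34.15478515625},
    }
}
s = summarize(example)["00.0"]
print(s["n"], s["total_time_s"]["mean"], s["total_time_s"]["ci95"])
```

With the full dataset, `summarize(load_results(path))["29.0"]["total_time_s"]` should give the mean, SD, and CI reported for cfg 29.0, provided the file matches the layout above.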

Appendix: selected per‑config summaries (means over N = 41)

- 00.0: kernel 4.072 s, userspace 15.201 s, total 19.273 s; Image 34.15 MiB.

- 29.0 (best): kernel 1.974 s, userspace 13.968 s, total 15.943 s; Image 12.02 MiB.

- 30.0 (latest): kernel 1.978 s, userspace 13.995 s, total 15.974 s; Image 11.96 MiB.